The rise of AI language models has changed the way online content is discovered and used. For years, search engines have relied on sitemaps to find and understand website pages. Now, a new experimental format called llms.txt aims to help Large Language Models (LLMs) identify your most valuable, high-quality content — the kind you want to be surfaced in AI responses. If you run a WordPress site, adding an llms.txt file is surprisingly straightforward. In this guide, we’ll explain exactly what it is, what it does (and doesn’t do), and walk you through step-by-step methods for setting it up using Yoast SEO, Rank Math, or a manual approach.


What Is llms.txt?

Think of llms.txt as the AI-era cousin of robots.txt. Instead of telling search engines what they can’t access, it tells AI tools what they should pay attention to. It’s a plain-text file, served at:

https://yoursite.com/llms.txt

Inside the file, you list links to your best, most authoritative, and well-structured content — ideally in formats that AI systems can easily parse, such as Markdown. You can also include short summaries or explanations to provide context for the models.

What llms.txt Is Not

It’s important to clarify: llms.txt does not block AI crawlers or prevent your content from being used for training. It is a signal, not an enforcement tool. If you want to restrict AI access, you still need to use robots.txt with opt-out rules for specific bots.

Instead, llms.txt is about guidance. It’s a way to say:

“Here’s the content I think is most relevant, accurate, and useful. Use this first.”

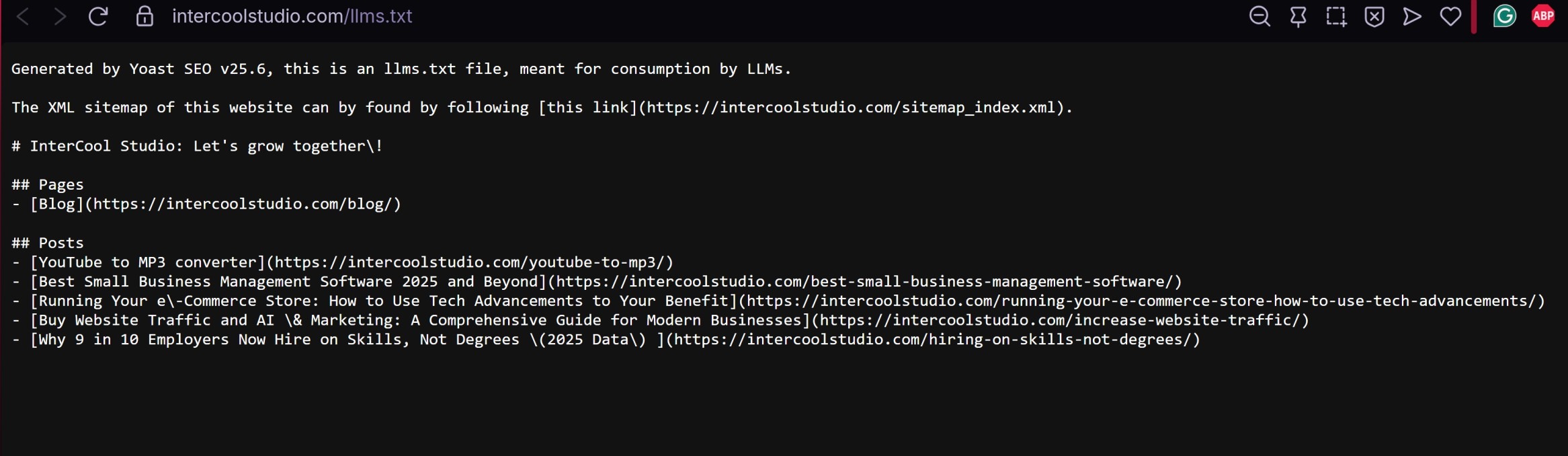

How a Good llms.txt File Looks

Here’s a basic example in the format Yoast generates:

Generated by Yoast SEO v25.6, this is an llms.txt file, meant for consumption by LLMs.

The XML sitemap of this website can be found by following [this link](https://intercoolstudio.com/sitemap_index.xml).

# InterCool Studio: Let's grow together\!

## Pages

- [Blog](https://intercoolstudio.com/blog/)

## Posts

- [YouTube to MP3 converter](https://intercoolstudio.com/youtube-to-mp3/)

- [Best Small Business Management Software 2025 and Beyond](https://intercoolstudio.com/best-small-business-management-software/)

- [Running Your e\-Commerce Store: How to Use Tech Advancements to Your Benefit](https://intercoolstudio.com/running-your-e-commerce-store-how-to-use-tech-advancements/)

- [Buy Website Traffic and AI \& Marketing: A Comprehensive Guide for Modern Businesses](https://intercoolstudio.com/increase-website-traffic/)

- [Why 9 in 10 Employers Now Hire on Skills, Not Degrees \(2025 Data\) ](https://intercoolstudio.com/hiring-on-skills-not-degrees/)

In the llms.txt format generated by Yoast SEO, the square brackets you see around text are not random symbols or some hidden crawler code — they are part of standard Markdown link formatting. In Markdown, the text inside the square brackets is the visible, clickable link text, while the URL in parentheses immediately after is the actual destination address.

For example, when you see [Blog](https://intercoolstudio.com/blog/), “Blog” is what a human or an AI reading system would interpret as the title of the link, while the URL in parentheses tells the system where that link points.

This style makes the file both human-friendly and machine-readable. A plain list of URLs would still be usable by crawlers, but it would lack descriptive labels. By including descriptive link text, you give AI crawlers and other systems extra context about what each page contains before they even visit it.

That context — for instance, “Best Small Business Management Software 2025 and Beyond” — is valuable because it immediately signals the topic and potential relevance of the content.

Yoast uses this Markdown approach because it keeps the file clean for human readers and also enriches the data for systems that understand link labels. Modern AI crawlers can process both the text inside the brackets and the URL inside the parentheses, meaning they can index your content more intelligently.

Essentially, the brackets are there to wrap a label, the parentheses are there to wrap the link, and together they form a Markdown link that is easy for both people and machines to understand.
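To make the label/URL split concrete, here is a minimal sketch of how a script could pull both parts out of an llms.txt file. The function name `parseLlmsLinks` is hypothetical, and the pattern assumes URLs contain no spaces or closing parentheses; it also strips the backslash escapes seen in the Yoast sample. It is an illustration, not how any particular AI crawler actually reads the file.

```javascript
// Extract Markdown links of the form [label](url) from llms.txt content.
// Assumption: labels may contain backslash-escaped characters (as in the
// Yoast sample), and URLs contain no spaces or closing parentheses.
function parseLlmsLinks(text) {
  const linkPattern = /\[((?:\\.|[^\]\\])*)\]\(([^)\s]+)\)/g;
  const links = [];
  for (const match of text.matchAll(linkPattern)) {
    links.push({
      // Drop the backslashes used to escape Markdown punctuation.
      label: match[1].replace(/\\(.)/g, '$1').trim(),
      url: match[2],
    });
  }
  return links;
}

// Two entries taken from the example file, written as a JS string.
const sample = [
  '## Pages',
  '- [Blog](https://intercoolstudio.com/blog/)',
  '## Posts',
  '- [YouTube to MP3 converter](https://intercoolstudio.com/youtube-to-mp3/)',
].join('\n');

console.log(parseLlmsLinks(sample));
```

Each returned object pairs the human-readable label with its destination, which is the extra context a bare URL list would not provide.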

Setting Up llms.txt in WordPress

1. Yoast SEO Method

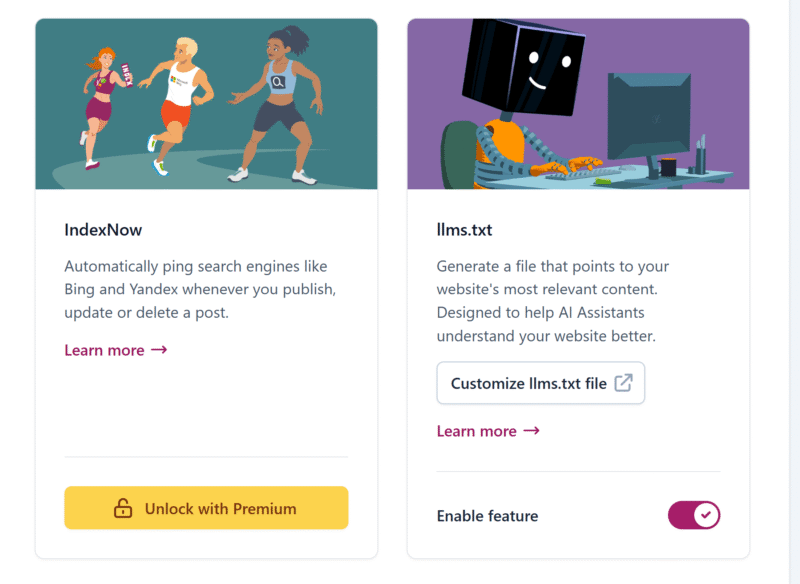

If you’re already using Yoast SEO, recent versions let you enable and edit llms.txt right in the dashboard.

Steps:

- Go to SEO → Settings → Site Features.

- Scroll to the APIs section.

- Find the option described as “Generate a file that points to your website’s most relevant content. Designed to help AI Assistants understand your website better.”

- Toggle llms.txt on (Enable feature).

- Click Customize llms.txt to choose the exact pages or posts you want included.

- Save changes — Yoast automatically generates and serves the file.

Yoast’s method is ideal if you want a quick setup with minimal manual work.

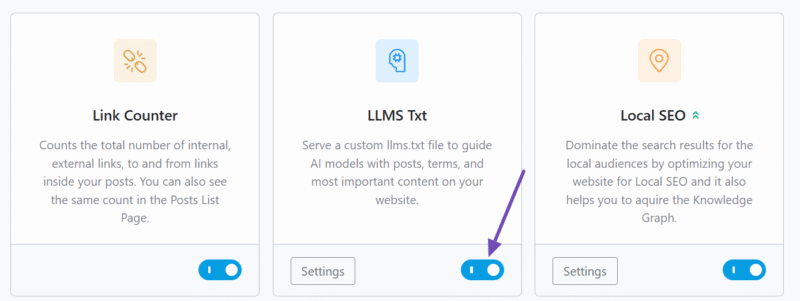

2. Rank Math Method

Rank Math also supports llms.txt with more granular controls.

Steps:

- Go to Rank Math SEO → Dashboard.

- Enable the LLMS Txt module.

- Navigate to General Settings → Edit llms.txt.

- Choose which post types, taxonomies, or custom URLs to include.

- Save changes — Rank Math will serve the file from your site root.

Rank Math is a better fit if you want fine-tuned control over exactly what appears in llms.txt.

3. Manual Setup

If you prefer complete control or don’t use an SEO plugin:

- Create a plain text file called llms.txt on your computer.

- Write your content in Markdown format, linking to your most important pages.

- Upload the file to your site’s root directory (/public_html/) via FTP or cPanel.

- Test it by visiting https://yoursite.com/llms.txt in your browser.

This approach is flexible but requires more manual upkeep. llms.txt example:
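If you would rather not type the file by hand, a small script can assemble it from a curated list. The sketch below is one possible shape, not an official tool: `buildLlmsTxt`, the `sections` structure, and the example.com URLs are all hypothetical, and the `: description` suffix after a link is an assumed convention for the optional short summaries mentioned earlier.

```javascript
// Build llms.txt content from a hand-curated list of sections and links.
// All names and URLs here are placeholders for illustration.
function buildLlmsTxt({ siteName, sections }) {
  const lines = [`# ${siteName}`, ''];
  for (const section of sections) {
    lines.push(`## ${section.heading}`, '');
    for (const item of section.links) {
      // Optional description is appended after the link (assumed convention).
      const note = item.description ? `: ${item.description}` : '';
      lines.push(`- [${item.title}](${item.url})${note}`);
    }
    lines.push('');
  }
  // Normalize to exactly one trailing newline.
  return lines.join('\n').trimEnd() + '\n';
}

const content = buildLlmsTxt({
  siteName: 'Example Site',
  sections: [
    {
      heading: 'Posts',
      links: [
        { title: 'Setup Guide', url: 'https://example.com/setup-guide/', description: 'Step-by-step installation' },
        { title: 'Blog', url: 'https://example.com/blog/' },
      ],
    },
  ],
});

console.log(content);
```

Save the printed text as llms.txt and upload it as described in the steps above.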

Alternative Ways to Generate an llms.txt file

Below are two robust ways to generate and maintain an llms.txt file without relying on one-size-fits-all automation. The first approach keeps everything inside WordPress with a tiny custom plugin (or code snippet) so you curate exactly what goes in. The second integrates llms.txt into modern build pipelines for headless CMS setups and static site generators, so the file is rebuilt automatically whenever content changes.

WordPress: lightweight plugin or code snippet

A minimal plugin lets you decide which URLs appear and when the file updates. It’s fast, transparent, and version-controllable. The core idea is: hook into content changes, compile a curated set of links (e.g., recent “pillar” posts or a hand-picked list), and write a Markdown-style list to /llms.txt in the site root.

How it works in practice:

Create a tiny must-use plugin (or a regular plugin) that regenerates llms.txt on publish/update and on demand. You can target only posts in specific categories or with a custom field include_in_llms=1 to keep quality high.

<?php

/**
 * Plugin Name: Custom llms.txt Generator
 * Description: Generates /llms.txt with curated posts in Markdown-style links (headings + links).
 */

// Rebuild on publish/update of posts you care about.
add_action('save_post_post', function ($post_id, $post, $update) {
    // Only run on published posts.
    if (get_post_status($post_id) !== 'publish') {
        return;
    }
    custom_llms_generate_file();
}, 10, 3);

// Optional: manual trigger via WP-CLI -> wp llms:build
if (defined('WP_CLI') && WP_CLI) {
    WP_CLI::add_command('llms:build', function () {
        custom_llms_generate_file();
        WP_CLI::success('llms.txt rebuilt.');
    });
}

function custom_llms_generate_file() {
    // Query curated content. Example: published posts tagged with `pillar`.
    $args = [
        'post_type'      => 'post',
        'post_status'    => 'publish',
        'posts_per_page' => 50,
        'tax_query'      => [[
            'taxonomy' => 'post_tag',
            'field'    => 'slug',
            'terms'    => ['pillar'],
        ]],
        'orderby'        => 'date',
        'order'          => 'DESC',
    ];
    $q = new WP_Query($args);

    // esc_url_raw() is used instead of esc_url() because the output is a
    // plain-text file, not HTML, so ampersands must not be entity-encoded.
    $lines   = [];
    $lines[] = 'Generated by Custom llms.txt Generator';
    $lines[] = 'The XML sitemap of this website can be found by following [this link](' . esc_url_raw(get_home_url(null, '/sitemap_index.xml')) . ').';
    $lines[] = '';
    $lines[] = '# ' . get_bloginfo('name');
    $lines[] = '';
    $lines[] = '## Posts';

    if ($q->have_posts()) {
        while ($q->have_posts()) {
            $q->the_post();
            // Strip tags and decode entities so titles read as plain text.
            $title   = html_entity_decode(wp_strip_all_tags(get_the_title()), ENT_QUOTES, 'UTF-8');
            $url     = esc_url_raw(get_permalink());
            $lines[] = '- [' . $title . '](' . $url . ')';
        }
        wp_reset_postdata();
    } else {
        // Fallback when no curated posts exist yet.
        $lines[] = '- [Blog](' . esc_url_raw(get_home_url(null, '/blog/')) . ')';
    }

    $content = implode("\n", $lines) . "\n";

    // Write to site root. Adjust path if your docroot differs.
    $root = ABSPATH . 'llms.txt';
    if (file_put_contents($root, $content) === false) {
        error_log('Custom llms.txt Generator: could not write ' . $root);
    }
}

This pattern is intentionally simple. You can extend it by adding additional sections (e.g., ## Guides, ## Case Studies) or a ## Pages block that includes your blog hub. If you prefer not to rely on tags, switch to a custom field gate: only posts whose include_in_llms meta key is set to 1 make the list. To reduce noise, schedule a weekly rebuild via WP-Cron that re-sorts by performance metrics you store (e.g., a custom meta set by your analytics sync).

When you need more control but don’t want a full plugin, the same logic can live in a site-specific plugin or be split into: (1) a generator function, (2) a WP-CLI command, and (3) an admin button that rebuilds the file on click.

Headless CMS or static site generators (Next.js, Gatsby, Hugo, Jekyll)

For headless or static stacks, treat llms.txt as a build artifact. The build pipeline fetches curated entries, sorts and filters them, and emits a Markdown-style document that mirrors the Yoast-like format you prefer.

General approach in a Node/Next.js/Gatsby pipeline:

- Query your CMS (WordPress REST/GraphQL, Sanity, Contentful, Strapi) for “pillar” or “featured” content.

- Map results into the llms.txt structure (H1/H2 headings and Markdown links).

- Write /public/llms.txt (Next/Gatsby) or the equivalent static output path.

- Commit the generator script so it runs on every build in Vercel/Netlify.

Example Node script (works in Next.js/Gatsby builds):

// scripts/build-llms.js
// Assumes Node 18+ (built-in fetch) and CommonJS, so it runs with plain `node`.
const fs = require('fs');

// The WordPress REST API `tags` filter takes numeric term IDs, not slugs.
// Placeholder value: replace with the ID of your `pillar` tag.
const PILLAR_TAG_ID = 12;

async function main() {
  // Replace with your CMS endpoint and filtering.
  const res = await fetch(`https://example.com/wp-json/wp/v2/posts?per_page=50&tags=${PILLAR_TAG_ID}&_fields=title,link`);
  if (!res.ok) {
    throw new Error(`CMS request failed with status ${res.status}`);
  }
  const posts = await res.json();

  const lines = [];
  lines.push('Generated by Build Pipeline, this is an llms.txt file, meant for consumption by LLMs.');
  lines.push('The XML sitemap of this website can be found by following [this link](https://example.com/sitemap_index.xml).');
  lines.push('');
  lines.push('# Example Site');
  lines.push('');
  lines.push('## Posts');

  posts.forEach(p => {
    // Titles arrive as rendered HTML, so strip any tags.
    const title = p.title?.rendered?.replace(/<[^>]+>/g, '') ?? 'Untitled';
    const url = p.link;
    lines.push(`- [${title}](${url})`);
  });

  const out = lines.join('\n') + '\n';
  fs.writeFileSync('./public/llms.txt', out, 'utf8'); // Next.js/Gatsby public dir
  console.log('llms.txt built');
}

main().catch(err => {
  console.error(err);
  process.exit(1);
});

Wire this into your build with a package script so every deployment refreshes the file:

{
  "scripts": {
    "prebuild": "node scripts/build-llms.js",
    "build": "next build"
  }
}

Hugo and Jekyll fit neatly into the same idea but via templating. In Hugo, create a custom output format for llms.txt and a dedicated layout that iterates through pages with params.include_in_llms = true. Hugo will emit a real file during hugo builds:

# config.toml
[outputs]
  home = ["HTML", "LLMS"]

[outputFormats.LLMS]
  mediaType = "text/plain"
  baseName = "llms"
  isPlainText = true

{{/* layouts/index.llms.txt */}}
Generated by Hugo, this is an llms.txt file, meant for consumption by LLMs.

The XML sitemap of this website can be found by following [this link]({{ .Site.BaseURL }}sitemap.xml).

# {{ .Site.Title }}

## Posts

{{ range where .Site.RegularPages "Params.include_in_llms" true }}
- [{{ .Title }}]({{ .Permalink }})
{{ end }}

Jekyll is similar: add a generator plugin or a collection, filter by a front-matter flag like include_in_llms: true, and output a single file at the site root during jekyll build.

Best Practices for llms.txt

1. Quality Over Quantity — Include Your Top 10–50 Resources Only

The llms.txt file isn’t meant to be a dumping ground for every single page on your site. Instead, it should act as a curated “AI-ready” reading list. By listing only your most authoritative, evergreen, and high-quality resources, you ensure that AI crawlers are fed the best version of your expertise.

- Why it matters: AI systems may use your llms.txt content to train models or generate answers. If you give them mediocre or outdated material, that’s what will represent you in search results and AI outputs.

- How to apply it: Focus on cornerstone articles, detailed guides, whitepapers, or top-performing blog posts. Avoid thin content, landing pages with little information, or temporary announcements.
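A quick automated check can help keep the list within the 10–50 range suggested above. The following is a rough sketch under stated assumptions: `lintLlmsTxt` is a hypothetical helper, it counts every Markdown link in the file (including a sitemap link, if present), and the default thresholds simply mirror this section's guideline rather than any formal rule.

```javascript
// Count Markdown links in llms.txt content and flag common curation problems.
// Thresholds default to the 10-50 guideline from this section (an editorial
// rule of thumb, not part of any specification).
function lintLlmsTxt(text, { minLinks = 10, maxLinks = 50 } = {}) {
  const urls = [...text.matchAll(/\]\(([^)\s]+)\)/g)].map((m) => m[1]);
  const warnings = [];

  if (urls.length < minLinks) {
    warnings.push(`Only ${urls.length} links; consider adding more cornerstone content.`);
  }
  if (urls.length > maxLinks) {
    warnings.push(`${urls.length} links; consider trimming to your strongest resources.`);
  }

  // Report each duplicated URL once.
  const seen = new Set();
  const duplicates = new Set();
  for (const url of urls) {
    if (seen.has(url)) duplicates.add(url);
    seen.add(url);
  }
  for (const url of duplicates) {
    warnings.push(`Duplicate link: ${url}`);
  }

  return { linkCount: urls.length, warnings };
}

const report = lintLlmsTxt('- [Blog](https://example.com/blog/)\n- [Blog again](https://example.com/blog/)');
console.log(report.linkCount, report.warnings);
```

Running it before each upload is one simple way to notice when the file has drifted from a curated list into a full site index.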

2. Keep It Updated — Revise When You Add or Remove Major Content

A llms.txt file is not “set and forget.” As your site grows and evolves, some resources become outdated, while new ones become your top performers.

- Why it matters: If AI crawlers rely on stale links, they could spread outdated or incorrect information. This can hurt your authority.

- How to apply it: Schedule a review every quarter. Add new high-value content, and remove anything that’s been merged, retired, or significantly revised.
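Part of the quarterly review can be scripted. The sketch below looks for links that no longer return HTTP 200, which often indicates a page that was retired, merged, or redirected. It is an assumption-laden illustration: `findStaleLinks` is a hypothetical name, it relies on the built-in `fetch` of Node 18+, and some servers reject HEAD requests, in which case you would switch to GET.

```javascript
// Report links in llms.txt content that do not answer with HTTP 200.
// fetchFn is injectable so the check can be tested without network access;
// by default it uses the global fetch available in Node 18+.
async function findStaleLinks(text, fetchFn = fetch) {
  const urls = [...new Set([...text.matchAll(/\]\(([^)\s]+)\)/g)].map((m) => m[1]))];

  const results = await Promise.all(
    urls.map(async (url) => {
      try {
        // redirect: 'manual' surfaces 301/302 responses instead of following them.
        const res = await fetchFn(url, { method: 'HEAD', redirect: 'manual' });
        return { url, status: res.status };
      } catch (err) {
        // Network failure (DNS error, timeout, refused connection).
        return { url, status: null };
      }
    })
  );

  return results.filter((r) => r.status !== 200);
}

// Usage (performs real requests):
// const fs = require('fs');
// findStaleLinks(fs.readFileSync('llms.txt', 'utf8')).then(console.log);
```

Anything the function returns is a candidate for removal or for updating to the page's new address.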

3. Match Your Brand Voice — Short Descriptions Should Sound Like Your Site, Not Generic Filler

If your llms.txt includes descriptions for each link (some setups allow for this), treat them as micro-marketing opportunities. These aren’t just functional labels — they can carry your tone and personality.

- Why it matters: When AI crawlers index descriptions alongside URLs, they help define your “brand voice” in AI-generated responses. A bland description makes your content less distinctive.

- How to apply it: If your brand tone is friendly, keep it friendly. If it’s expert and formal, reflect that. For example, instead of “Guide to content marketing”, use “Our definitive 2025 playbook for building unstoppable content marketing campaigns.”

4. Combine with robots.txt for a Full AI Crawling Strategy

The llms.txt file is only one part of controlling how AI crawlers interact with your site. The robots.txt file tells search engines and bots which areas they can or cannot crawl. Together, they create a two-tier system:

- robots.txt: Manages access — allows or blocks crawling.

- llms.txt: Curates what to prioritize for AI models.

- Why it matters: Without robots.txt rules, certain AI bots might still crawl pages you’d rather not share. Without llms.txt, you lose control over which pages they see first.

- How to apply it: Allow AI-friendly bots in robots.txt, and use llms.txt to point them toward your chosen resources. Block low-value or private areas (like /cart/, /wp-admin/, or member-only sections).
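The access side of this two-tier setup can be sketched as a small generator for robots.txt rules. Treat it as an illustration: `buildRobotsTxt` is a hypothetical helper, and the user-agent names in the usage example (GPTBot, CCBot) are crawler tokens published by their operators at the time of writing, so check each vendor's documentation before relying on them.

```javascript
// Generate robots.txt content that fully blocks selected bots and keeps
// all other crawlers out of low-value or private paths.
function buildRobotsTxt({ blockedBots = [], disallowPaths = [] }) {
  const lines = [];

  // One group per blocked bot: disallow the whole site.
  for (const bot of blockedBots) {
    lines.push(`User-agent: ${bot}`, 'Disallow: /', '');
  }

  // Default group for every other crawler.
  lines.push('User-agent: *');
  if (disallowPaths.length === 0) {
    lines.push('Disallow:'); // An empty Disallow means nothing is blocked.
  }
  for (const path of disallowPaths) {
    lines.push(`Disallow: ${path}`);
  }

  return lines.join('\n') + '\n';
}

console.log(
  buildRobotsTxt({
    blockedBots: ['GPTBot', 'CCBot'],
    disallowPaths: ['/cart/', '/wp-admin/'],
  })
);
```

Leave `blockedBots` empty if you want AI crawlers to have access, and let llms.txt handle the curation.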

Testing Your File

Once published:

1. Open https://yoursite.com/llms.txt in your browser to check its accessibility

Once you create your llms.txt file and place it in your site’s root directory, the very first test is to see if it’s publicly reachable.

- Why it matters: If the file isn’t accessible, AI crawlers can’t read it — which means all your curation work goes to waste. A missing file, 404 error, or blocked directory will make it invisible to AI systems.

- How to apply it:

- Open a browser and visit https://yoursite.com/llms.txt.

- You should see plain text or a neatly formatted list of URLs and optional descriptions.

- If you get an error, check:

- The file is in the correct location (public_html or your site’s root).

- File permissions allow public access (e.g., 644 in most hosting setups).

- Your security plugin or firewall isn’t blocking it.
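The same accessibility check can be scripted, which is handy after deployments. This is a minimal sketch assuming Node 18+ for the built-in `fetch`; `checkLlmsTxt` is a hypothetical name, and the "looks like text" and "has links" checks are rough heuristics rather than a formal validation.

```javascript
// Fetch /llms.txt from a site and summarize whether it is publicly reachable.
// fetchFn is injectable for testing; it defaults to the global fetch (Node 18+).
async function checkLlmsTxt(siteUrl, fetchFn = fetch) {
  const target = new URL('/llms.txt', siteUrl).href;
  const res = await fetchFn(target);
  const contentType = res.headers.get('content-type') || '';
  const body = res.status === 200 ? await res.text() : '';

  return {
    url: target,
    status: res.status,
    reachable: res.status === 200,
    // A text/* content type suggests the server is not returning an HTML error page as a download.
    looksLikeText: contentType.startsWith('text/'),
    // At least one Markdown link should be present in a curated file.
    hasLinks: /\]\([^)\s]+\)/.test(body),
  };
}

// Usage (performs a real request):
// checkLlmsTxt('https://yoursite.com').then(console.log);
```

A 404 or 403 status points back to the checklist above: file location, permissions, or a security layer blocking the request.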

Your result in the browser should look something like this:

2. Use an AI Assistant That Reads the Web to See If Your Listed Content Is Prioritized in Its Responses

Creating an llms.txt file is step one; verifying that it influences AI behavior is step two.

- Why it matters: You want to know whether AI crawlers and assistants are actually using your curated list when generating answers. If your best content still isn’t surfacing, you may need to adjust your list or descriptions.

- How to apply it:

- Choose an AI tool known for web access (e.g., Perplexity.ai, Bing Copilot with browsing enabled, or ChatGPT with web browsing turned on).

- Ask a question that your curated content directly answers.

- See if the AI cites or references your listed pages.

- If it doesn’t:

- Review whether your llms.txt contains the most relevant URLs for that topic.

- Ensure those pages are high-quality, SEO-friendly, and easily crawlable.

- Consider updating your llms.txt with better anchor descriptions.

Final Thoughts

llms.txt won’t magically boost your rankings overnight, but it’s a future-facing signal that could shape how AI tools use and present your content. In the same way that sitemaps helped search engines crawl smarter, llms.txt could help LLMs provide more accurate, source-rich answers, with your work front and center.

For WordPress users, Yoast and Rank Math make implementation quick and painless, so there’s little downside to setting it up today.


Andrej Fedek is the creator and the one-person owner of two blogs: InterCool Studio and CareersMomentum. As an experienced marketer, he is driven by turning leads into customers with White Hat SEO techniques. Besides being a boss, he is a real team player with a great sense of equality.